Introduction

Artificial Intelligence (AI) is among the most prominent technologies redefining sectors, markets, and aspects of human life. Yet how we got to this point is rarely explained. Reviewing the timeline of its development helps us understand what AI can do today. This article looks at the history of artificial intelligence, from ancient myths to the present day.

Early Concepts of AI

Ancient Myths and Legends

The history of artificial intelligence begins with the idea of creating intelligent beings, which has fascinated humans for centuries. Ancient myths and fables are rich in automata and artificial beings built to replicate human abilities. Early tales such as the myth of Pygmalion and Galatea reflect humankind's longstanding desire to create lifelike beings.

Philosophical Roots in the 17th and 18th Centuries

Mechanical reasoning, an integral part of the history of artificial intelligence, was explored in the 17th and 18th centuries by philosophers such as René Descartes and Gottfried Wilhelm Leibniz. Descartes described the body as a kind of machine, and Leibniz developed the binary number system that underlies modern digital computing. It could be argued that these figures wrote the prologue to the later development of AI.

The Birth of Modern AI

Alan Turing and the Turing Machine

The modern history of artificial intelligence can be traced back to the work of the English mathematician and logician Alan Turing. In 1936, Turing described the Turing Machine, a theoretical model of a universal machine capable of carrying out any computation. In 1950, he proposed what came to be known as the Turing Test, a way of judging whether a machine can convincingly imitate human intelligence.

The Dartmouth Conference of 1956

AI, in its modern sense, was formally established as a field at the Dartmouth Conference of 1956, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The conference brought together scientists who believed that human intelligence could be emulated in machines. The term "Artificial Intelligence" was coined in the proposal for this conference.

The First AI Programs

Logic Theorist and General Problem Solver

The first AI programs appeared around the time of the Dartmouth Conference. One of the earliest was the Logic Theorist, developed by Allen Newell and Herbert A. Simon in 1955. Its purpose was to model human-like problem solving by proving mathematical theorems. The success of the Logic Theorist led to work on another program, the General Problem Solver, which took a more general approach to problem solving.

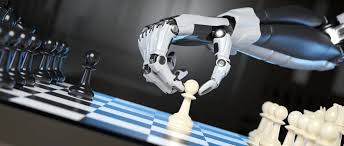

Early Chess Programs and Their Impact

In the late 1950s and early 1960s, scientists began building chess-playing programs, which served as yardsticks for progress in artificial intelligence. By today's standards these early programs could perform only simple calculations, but they showed that AI could handle tasks involving strategic decision-making and planning.

The Rise of Machine Learning

Introduction to Machine Learning

As AI research proceeded, the difficulty of hardcoding every possible decision soon became clear, especially for complex tasks whose decision rules could only be roughly sketched. This realization paved the way for machine learning, a branch of AI concerned with creating algorithms that enable machines to learn from data and improve over time.
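To make the contrast with hardcoding concrete, here is a minimal sketch of a perceptron, one of the simplest learning algorithms. Rather than writing the rule for logical AND by hand, the program adjusts its weights from labeled examples. The function names, the training data, and the settings (`epochs`, `learning_rate`) are illustrative assumptions, not something taken from a particular historical system.

```python
def predict(weights, bias, features):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1):
    """Learn weights for a linear threshold rule from labeled examples."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for features, label in examples:
            # The error is 0 when the guess is right, +1 or -1 when it is wrong.
            error = label - predict(weights, bias, features)
            bias += learning_rate * error
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
    return weights, bias


# Hypothetical training data: the truth table of logical AND.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
learned = [predict(weights, bias, features) for features, _ in AND_EXAMPLES]
print(learned)
```

Nothing in the code states what AND means. The behavior comes entirely from the examples, which is the shift in approach that machine learning introduced.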

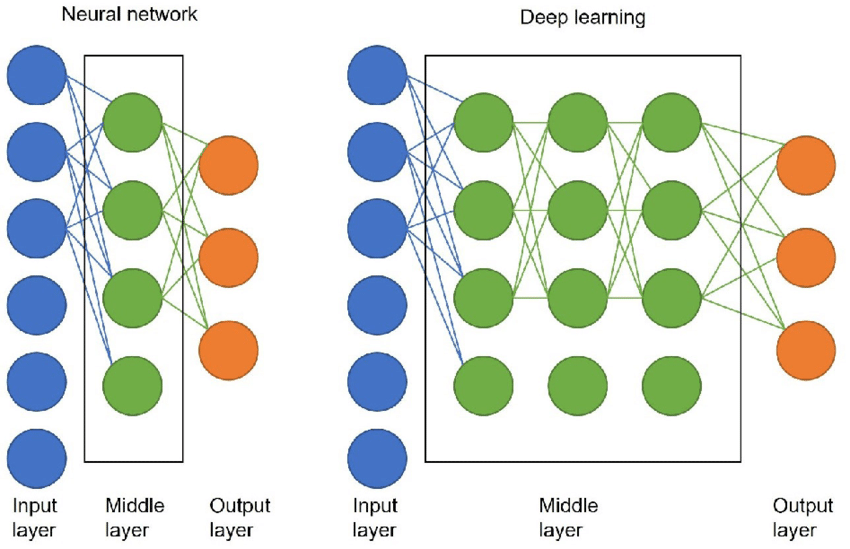

The Significance of Neural Networks

Neural networks, loosely modeled on the structure of the human brain, became a central focus of machine learning. The idea was first proposed in the 1940s, but major progress came only in the 1980s. These networks paved the way for deep learning, which underlies most AI solutions today.

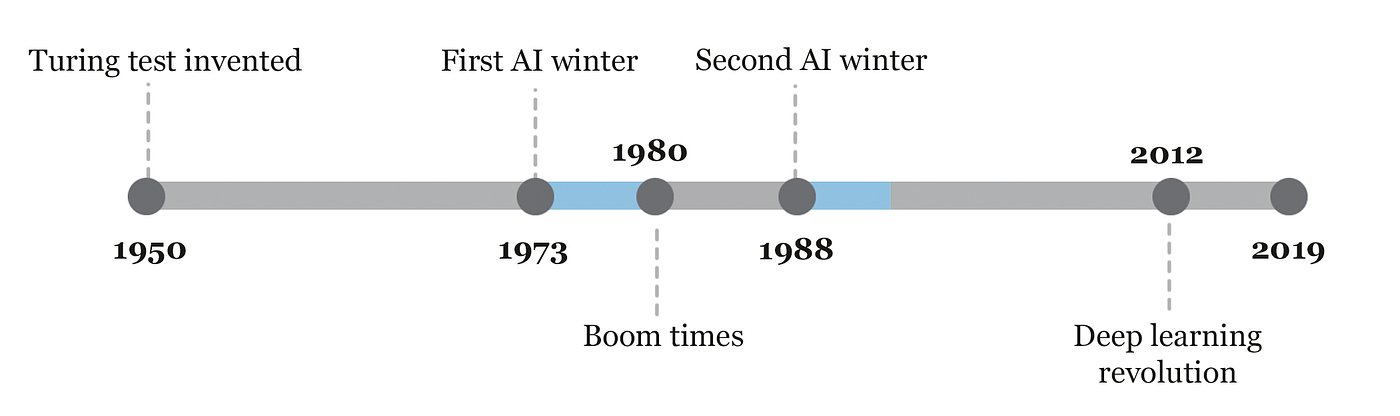

AI Winter

Reasons Behind the Decline in AI Research

Early enthusiasm for AI, built on the foundational research of the 1960s and 1970s, gave way to a period known as the AI Winter. The decline is mainly attributed to excessive optimism about what AI could deliver and an underestimation of the obstacles involved. As disillusionment set in, both the public and governments withdrew their support and funding.

Impact on Funding and Public Interest

During the AI Winter, these problems led to negative media coverage, reduced funding, and the abandonment of many research projects. At the same time, the period offered lessons that benefited later AI development: more realistic expectations and a focus on solving practical problems.

Resurgence of AI

Development of Expert Systems

A significant chapter in the history of artificial intelligence began in the 1980s with the appearance of expert systems. These are computer programs designed to emulate the decision-making of a professional in a particular discipline. They were used in areas such as medicine, finance, and engineering. Their success demonstrated the potential of AI in real-world applications.
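One common way to build such a system is with if-then rules applied to known facts until no new conclusions follow, a technique called forward chaining. The sketch below illustrates the idea only. The rules and fact names are made up for the example and do not come from any real expert system or medical guideline.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new conclusions appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules: (set of conditions, conclusion).
RULES = [
    (frozenset({"fever", "cough"}), "possible_flu"),
    (frozenset({"possible_flu", "short_of_breath"}), "refer_to_doctor"),
]

derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
print(sorted(derived))
```

The second rule depends on a conclusion produced by the first, which is why the loop keeps running until a full pass adds nothing new.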

The Rise of Computational Power and Data Availability

The late 20th century was also a crucial period in the history of artificial intelligence, because the computational power and data needed to revive AI became available. High-powered processors and abundant digital data meant that new and far more sophisticated AI models could be built and studied.

The Advent of Big Data and AI

How Big Data Revolutionized AI

Data mining, a vital process in the history of artificial intelligence, involves extracting information from large datasets using advanced software tools. The term big data describes digital data gathered in large volumes. Big data gave AI a major boost by supplying the fuel that machine learning algorithms need to learn and improve. Combining AI with big data has led to significant improvements in natural language processing, image recognition, and predictive analytics.

The Role of Cloud Computing

The advancement of artificial intelligence has also benefited from cloud computing and its scalable computing resources. Companies like Google, Amazon, and Microsoft have used cloud technology to deploy AI applications at scale, making AI solutions accessible to companies and developers worldwide.

AI in the 21st Century

AI’s Integration into Everyday Life

In the 21st century, AI has become familiar to most people through its integration into everyday life. From virtual assistants such as Siri and Alexa to recommendation systems on Netflix, Amazon, and other online platforms, AI is now woven into society. This integration has made our lives more convenient and has transformed industries by enabling personalized services and automating routine tasks.

Key Applications in Different Industries

AI has been integrated into many industries to improve performance and drive new ideas. In healthcare, AI helps doctors diagnose diseases more accurately. In finance, AI algorithms are used for fraud detection and high-speed trading. In manufacturing, AI-assisted robots are improving efficiency and reducing costs.

Deep Learning and Neural Networks

Advancements in Deep Learning

Deep learning, a branch of machine learning, has been a game changer in the AI field. By stacking multiple layers in a neural network, deep learning models can analyze large amounts of data and identify the patterns that matter. This has led to improved image recognition and speech processing, and to advances in the automotive industry such as self-driving cars.
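To show why layering matters, the sketch below passes inputs through two layers of simple threshold units to compute XOR, a function that no single threshold unit can represent. The weights here are set by hand as an assumption for illustration. Real deep learning models learn their weights from data and use many more layers and units.

```python
def step(value):
    """Threshold activation: 1 if the input is positive, else 0."""
    return 1 if value > 0 else 0


def layer(inputs, weights, biases):
    """Compute one layer: each row of weights defines one unit."""
    return [step(bias + sum(w * x for w, x in zip(row, inputs)))
            for row, bias in zip(weights, biases)]


# Hand-picked (not learned) weights, chosen only for this example.
HIDDEN_WEIGHTS = [[2, 2], [2, 2]]   # unit 1 acts like OR, unit 2 like AND
HIDDEN_BIASES = [-1, -3]
OUTPUT_WEIGHTS = [[2, -2]]          # fires for "OR but not AND"
OUTPUT_BIASES = [-1]


def xor_network(x1, x2):
    """Feed two binary inputs through the hidden layer, then the output layer."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


results = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(results)
```

The hidden layer turns the raw inputs into intermediate features, and the output layer combines them. Stacking layers in this way is what lets deeper networks capture patterns that a single layer cannot.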

The Impact of AI in Fields Like Healthcare and Finance

In healthcare, deep learning has enabled AI systems that help diagnose diseases such as cancer and heart disease efficiently. In finance, AI models are used to predict market trends, select investment portfolios, and manage risk. These developments not only improve outcomes but also open new areas of research in these fields.

AI and Robotics

The Convergence of AI and Robotics

AI is now merging with robotics, creating new opportunities in automation. AI-controlled robots are being used in manufacturing, transportation, and the home. Partial and full automation, robotic surgery, and AI-controlled drones are some examples of how this convergence is affecting industries and augmenting human abilities.

Autonomous Vehicles and Robotic Surgery

Self-driving cars powered by artificial intelligence are predicted to transform transport by reducing accidents and improving convenience. Similarly, robotic surgery is improving accuracy and speeding patients' post-operative recovery, a paradigm shift in medicine.

Ethical Considerations in AI

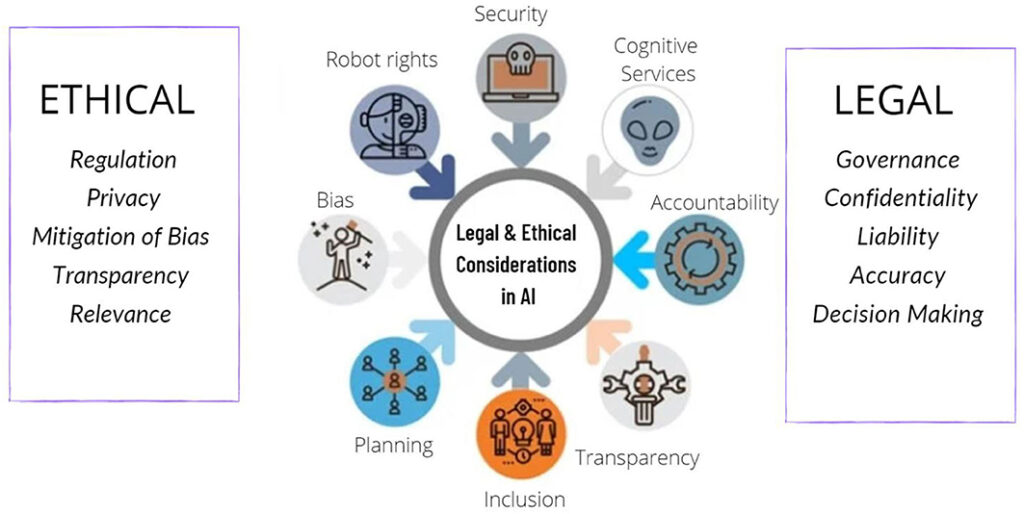

The Importance of Ethical AI Development

The ongoing development of AI raises numerous ethical concerns. Safeguards are needed to ensure that AI is developed and deployed safely, that these technologies do not harm society, and that the benefits of adopting them are fairly shared.

Addressing Bias, Privacy, and Job Displacement

One challenge to the effective use of AI is bias in automated decision-making, since algorithms can produce unfair results. Privacy is also important because many AI applications process large amounts of personal information. In addition, the risk of AI displacing human workers has to be managed well to limit its social impact.

The Future of AI

Predicting Future Advancements

As for where artificial intelligence is headed, we find ourselves in a realm filled with opportunities. As AI grows and develops, intelligent systems will likely take on tasks that people cannot perform. From robotics to AI-based creative problem solving, the future looks bright for new developments.

The Potential Impact on Society and the Economy

The progress of AI will also affect society and the economy more and more. AI has the potential to alleviate some of the major problems facing the world, but it also has drawbacks that deserve consideration. Managing the opportunities and threats associated with AI will be important as we move toward an even more AI-dependent world.

Conclusion

The history of artificial intelligence is an interesting story, one that reads like a myth but describes present-day reality. The future will not be free of difficulties and threats, but the experience gained over the course of AI development will help us avoid mistakes and take advantage of the opportunities to come. Artificial intelligence has a great future ahead, and if it is used with proper planning, it can change the world.

FAQs

1. What is the origin of AI?

As an idea, AI has its roots in myths and stories. As a branch of study, it developed during the twentieth century, especially through the work of Alan Turing and the formal creation of the field at the Dartmouth Conference of 1956.

2. Who are the key figures in AI history?

Key figures in AI history include Alan Turing, John McCarthy, Marvin Minsky, and Herbert A. Simon, all of whom made significant contributions to the development of AI as a scientific discipline.

3. What caused the AI Winter?

The AI Winter was caused by unrealistic expectations and overhyped promises that AI could not deliver on, leading to a decline in funding and interest in AI research during the 1970s and 1980s.

4. How is AI impacting industries today?

AI is transforming industries by improving efficiency, reducing costs, and enabling new capabilities. It is widely used in healthcare, finance, manufacturing, and many other sectors.

5. What are the ethical concerns surrounding AI?

Ethical concerns in AI include bias in AI algorithms, privacy, the potential for job displacement, and the need for responsible AI development to ensure equitable benefits.